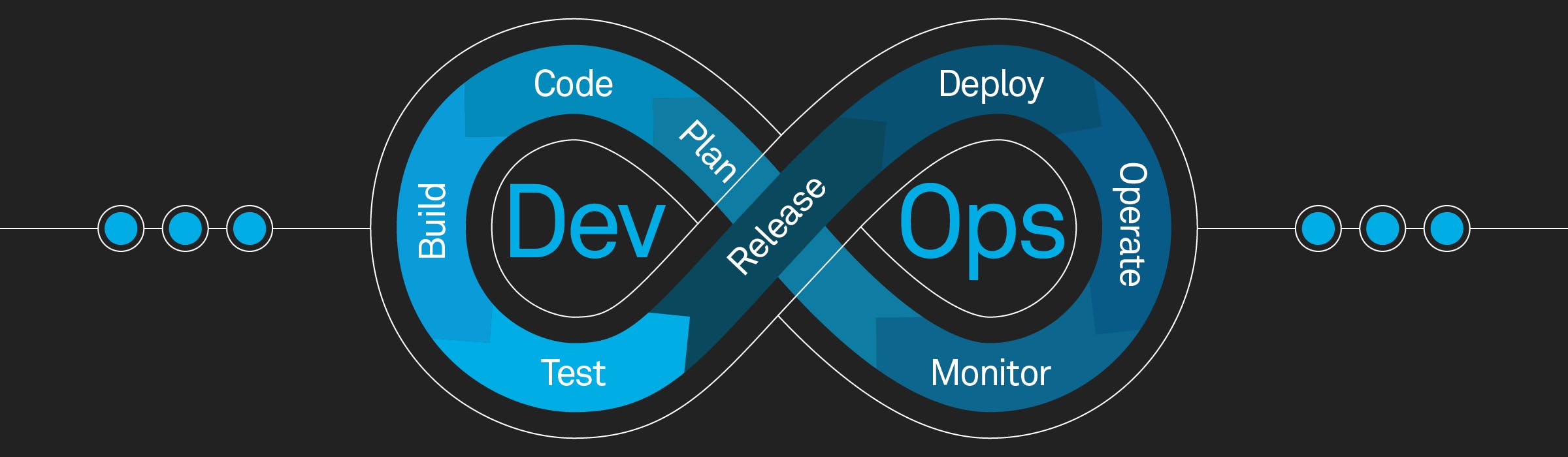

DevOps is an organizational model that combines development and operations teams to enable quicker application development and more straightforward maintenance of existing deployments. By adopting best practices, automation, and new tools, DevOps helps firms strengthen the relationships between Dev, Ops, and other stakeholders, which in turn promotes shorter, more controlled iterations. This project builds a solution for a company that uses DevOps practices to improve its software lifecycle. The company wants to orchestrate infrastructure in one cloud from a DevOps pipeline that runs in another.

BUSINESS NEED

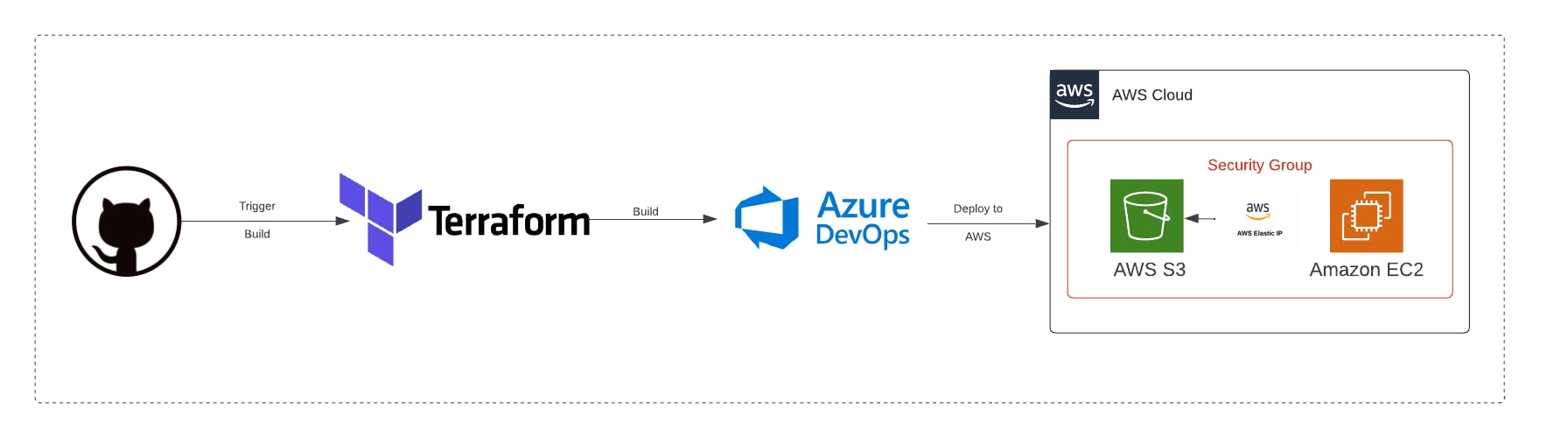

This solution targets a company that uses AWS and Azure as its cloud providers and Azure DevOps to manage its software lifecycle. The company does not want to use Jenkins or TeamCity to build or orchestrate its infrastructure; instead, it wants to provision its infrastructure automatically with Terraform.

SOLUTION

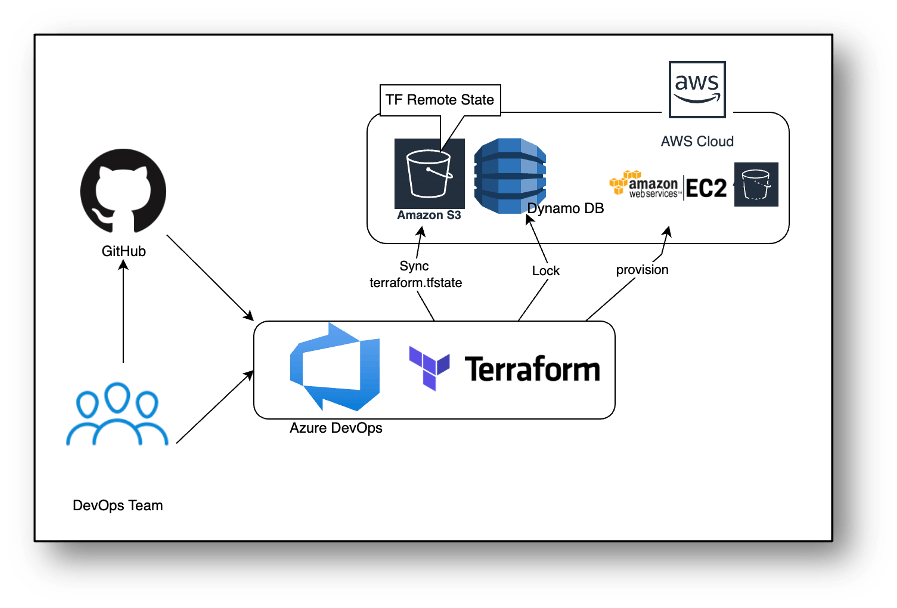

To implement this use case, we define the infrastructure as code using Terraform and provision several AWS resources (EC2 instances, an S3 bucket, and security groups). The Azure DevOps Pipeline automates the infrastructure setup on AWS. An S3 bucket stores the Terraform state, and a DynamoDB table provides state locking while operations are running. Our code repository is GitHub, which we link to the Azure DevOps Pipeline.

CI/CD Continuous Integration & Deployment with DevOps

Using Azure DevOps, we create various jobs that start as soon as we create a release. The jobs cover the steps of the Terraform workflow: "init," "plan," "apply," and "destroy." After these jobs have run, another job deploys the services inside AWS. When the project is complete, a separate release deletes the resources from AWS.

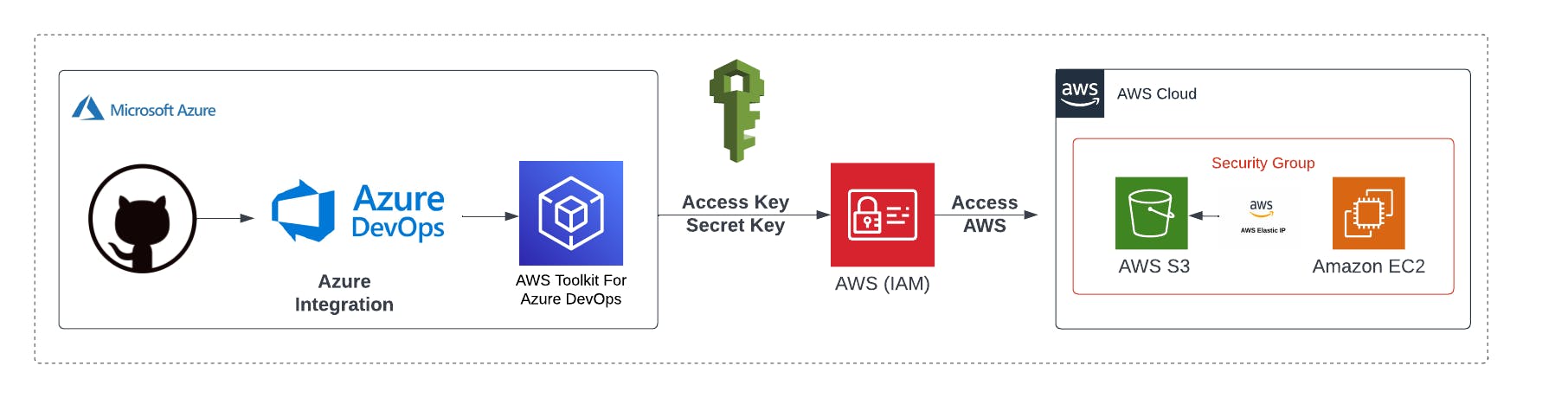

Security Architecture

From a security perspective, the data coming from the VCS (GitHub) will be encrypted by Azure. Azure allows us to integrate different VCSs into its DevOps platform. We must sign in using our GitHub credentials to establish a secure connection.

When the code is executed in Azure, the deployment is carried out on AWS. To reach the AWS account, the AWS Toolkit for Azure DevOps lets the pipeline sign in with programmatic access (an access key and a secret key).
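Because the pipeline authenticates with a long-lived access key, it helps to keep that key's permissions narrow. The following is a minimal sketch, not the project's actual policy: it covers only the state backend, reuses the bucket, key, and table names from backend.tf, and assumes the lock table already exists. The policy name and the `tf_state_access` and `tf_lock` labels are hypothetical, and the permissions needed to create the EC2, S3, and security group resources would have to be granted separately.

```hcl
# Hypothetical policy covering only the S3/DynamoDB state backend.
# Look up the existing lock table so its ARN does not have to be hard-coded.
data "aws_dynamodb_table" "tf_lock" {
  name = "my-dynamodb-table"
}

data "aws_iam_policy_document" "tf_state_access" {
  # Terraform lists the bucket to locate the state object.
  statement {
    actions   = ["s3:ListBucket"]
    resources = ["arn:aws:s3:::mydev-tf-state-bucket"]
  }

  # Read and write the state object stored under the key "main".
  statement {
    actions   = ["s3:GetObject", "s3:PutObject"]
    resources = ["arn:aws:s3:::mydev-tf-state-bucket/main"]
  }

  # Acquire and release the state lock.
  statement {
    actions = [
      "dynamodb:DescribeTable",
      "dynamodb:GetItem",
      "dynamodb:PutItem",
      "dynamodb:DeleteItem",
    ]
    resources = [data.aws_dynamodb_table.tf_lock.arn]
  }
}

resource "aws_iam_policy" "tf_state_access" {
  name   = "tf-state-access" # illustrative name
  policy = data.aws_iam_policy_document.tf_state_access.json
}
```

The policy would then be attached to the IAM user whose access key is stored in the Azure DevOps service connection.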

Solution Architecture

The DevOps team will access the Terraform code from GitHub and create jobs inside the release pipeline.

backend.tf

    terraform {
      backend "s3" {
        bucket         = "mydev-tf-state-bucket"
        key            = "main"
        region         = "us-east-2"
        dynamodb_table = "my-dynamodb-table"
      }
    }

Jenkinsfile.tf

    pipeline {
        agent any

        stages {
            stage('Checkout') {
                steps {
                    checkout scm
                }
            }

            stage('terraform init') {
                steps {
                    sh 'terraform init -reconfigure'
                }
            }

            stage('plan') {
                steps {
                    sh 'terraform plan'
                }
            }

            // "action" is expected to be supplied as a build parameter
            // (for example "apply" or "destroy").
            stage('Action') {
                steps {
                    echo "Terraform action is --> ${action}"
                    sh 'terraform ${action} --auto-approve'
                }
            }
        }
    }

main.tf

    provider "aws" {
      region = var.aws_region
    }

    resource "aws_vpc" "main" {
      cidr_block       = "172.16.0.0/16"
      instance_tenancy = "default"

      tags = {
        Name = "main"
      }
    }

    # Create security group with firewall rules
    resource "aws_security_group" "jenkins-sg-2022" {
      name        = var.security_group
      description = "security group for Ec2 instance"

      # Web UI on port 8080, open to any address
      ingress {
        from_port   = 8080
        to_port     = 8080
        protocol    = "tcp"
        cidr_blocks = ["0.0.0.0/0"]
      }

      # SSH, open to any address
      ingress {
        from_port   = 22
        to_port     = 22
        protocol    = "tcp"
        cidr_blocks = ["0.0.0.0/0"]
      }

      # Outbound TCP from the Jenkins server
      egress {
        from_port   = 0
        to_port     = 65535
        protocol    = "tcp"
        cidr_blocks = ["0.0.0.0/0"]
      }

      tags = {
        Name = var.security_group
      }
    }

    resource "aws_instance" "myFirstInstance" {
      ami                    = var.ami_id
      key_name               = var.key_name
      instance_type          = var.instance_type
      vpc_security_group_ids = [aws_security_group.jenkins-sg-2022.id]

      tags = {
        Name = var.tag_name
      }
    }

    # Create Elastic IP address
    resource "aws_eip" "myFirstInstance" {
      vpc      = true
      instance = aws_instance.myFirstInstance.id

      tags = {
        Name = "my_elastic_ip"
      }
    }

s3.tf

    resource "aws_s3_bucket" "my-s3-bucket" {
      bucket_prefix = var.bucket_prefix
      acl           = var.acl

      versioning {
        enabled = var.versioning
      }

      tags = var.tags
    }

sonar.tf

    resource "aws_instance" "mySonarInstance" {
      ami                    = "ami-0b9064170e32bde34"
      key_name               = var.key_name
      instance_type          = "t2.micro"
      vpc_security_group_ids = [aws_security_group.sonar-sg-2022.id]

      tags = {
        Name = "sonar_instance"
      }
    }

    resource "aws_security_group" "sonar-sg-2022" {
      name        = "security_sonar_group_2022"
      description = "security group for Sonar"

      ingress {
        from_port   = 9000
        to_port     = 9000
        protocol    = "tcp"
        cidr_blocks = ["0.0.0.0/0"]
      }

      ingress {
        from_port   = 22
        to_port     = 22
        protocol    = "tcp"
        cidr_blocks = ["0.0.0.0/0"]
      }

      # outbound from Sonar server
      egress {
        from_port   = 0
        to_port     = 65535
        protocol    = "tcp"
        cidr_blocks = ["0.0.0.0/0"]
      }

      tags = {
        Name = "security_sonar"
      }
    }

    # Create Elastic IP address for Sonar instance
    resource "aws_eip" "mySonarInstance" {
      vpc      = true
      instance = aws_instance.mySonarInstance.id

      tags = {
        Name = "sonar_elastic_ip"
      }
    }

variables.tf

    variable "aws_region" {
      description = "The AWS region to create things in."
      default     = "us-east-2"
    }

    variable "key_name" {
      description = "SSH key pair used to connect to the EC2 instance"
      default     = "myJune2021Key"
    }

    variable "instance_type" {
      description = "Instance type for EC2"
      default     = "t2.micro"
    }

    variable "security_group" {
      description = "Name of security group"
      default     = "jenkins-sgroup-dec-2021"
    }

    variable "tag_name" {
      description = "Name tag for the EC2 instance"
      default     = "my-ec2-instance"
    }

    variable "ami_id" {
      description = "AMI for Ubuntu EC2 instance"
      default     = "ami-020db2c14939a8efb"
    }

    variable "versioning" {
      type        = bool
      description = "(Optional) A state of versioning."
      default     = true
    }

    variable "acl" {
      type        = string
      description = "Canned ACL for the bucket. Defaults to private."
      default     = "private"
    }

    variable "bucket_prefix" {
      type        = string
      description = "(required since we are not using 'bucket') Creates a unique bucket name beginning with the specified prefix"
      default     = "my-s3bucket-"
    }

    variable "tags" {
      type        = map(string)
      description = "(Optional) A mapping of tags to assign to the bucket."

      default = {
        environment = "DEV"
        terraform   = "true"
      }
    }
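Every variable above has a default, so the pipeline can run without extra input. If different values are needed per environment, they can be overridden without editing variables.tf. A minimal sketch, assuming a hypothetical file named dev.tfvars that is passed to Terraform with `-var-file=dev.tfvars`; the values are illustrative and not taken from the project:

```hcl
# dev.tfvars (hypothetical file name, illustrative values)
aws_region    = "us-east-2"
instance_type = "t2.micro"
tag_name      = "my-ec2-instance-dev"
bucket_prefix = "my-s3bucket-dev-"

tags = {
  environment = "DEV"
  terraform   = "true"
}
```

Note that sonar.tf hard-codes its AMI and instance type, so these overrides would only affect the instance defined in main.tf and the bucket in s3.tf.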

As the Terraform code is executed, the Terraform state is stored in an S3 bucket that has already been created, and a DynamoDB table keyed on LockID provides the state lock. The resources are then provisioned in the AWS account.
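The bucket and lock table have to exist before the first `terraform init`, so they cannot be created by the same configuration that uses them as its backend. One way to create them, sketched here under the assumption of a separate one-off configuration (the `bootstrap/` location and the `tf_state` and `tf_lock` labels are hypothetical), is:

```hcl
# bootstrap/main.tf (hypothetical location, applied once before the pipeline runs)
provider "aws" {
  region = "us-east-2"
}

# Bucket that backend.tf points at. S3 bucket names are globally unique,
# so this exact name may need to change in another account.
resource "aws_s3_bucket" "tf_state" {
  bucket = "mydev-tf-state-bucket"
}

# Lock table. The S3 backend expects a string partition key named LockID.
resource "aws_dynamodb_table" "tf_lock" {
  name         = "my-dynamodb-table"
  billing_mode = "PAY_PER_REQUEST"
  hash_key     = "LockID"

  attribute {
    name = "LockID"
    type = "S"
  }
}
```

The names match the `bucket` and `dynamodb_table` values in backend.tf; on-demand billing is an assumption made to keep the sketch short.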

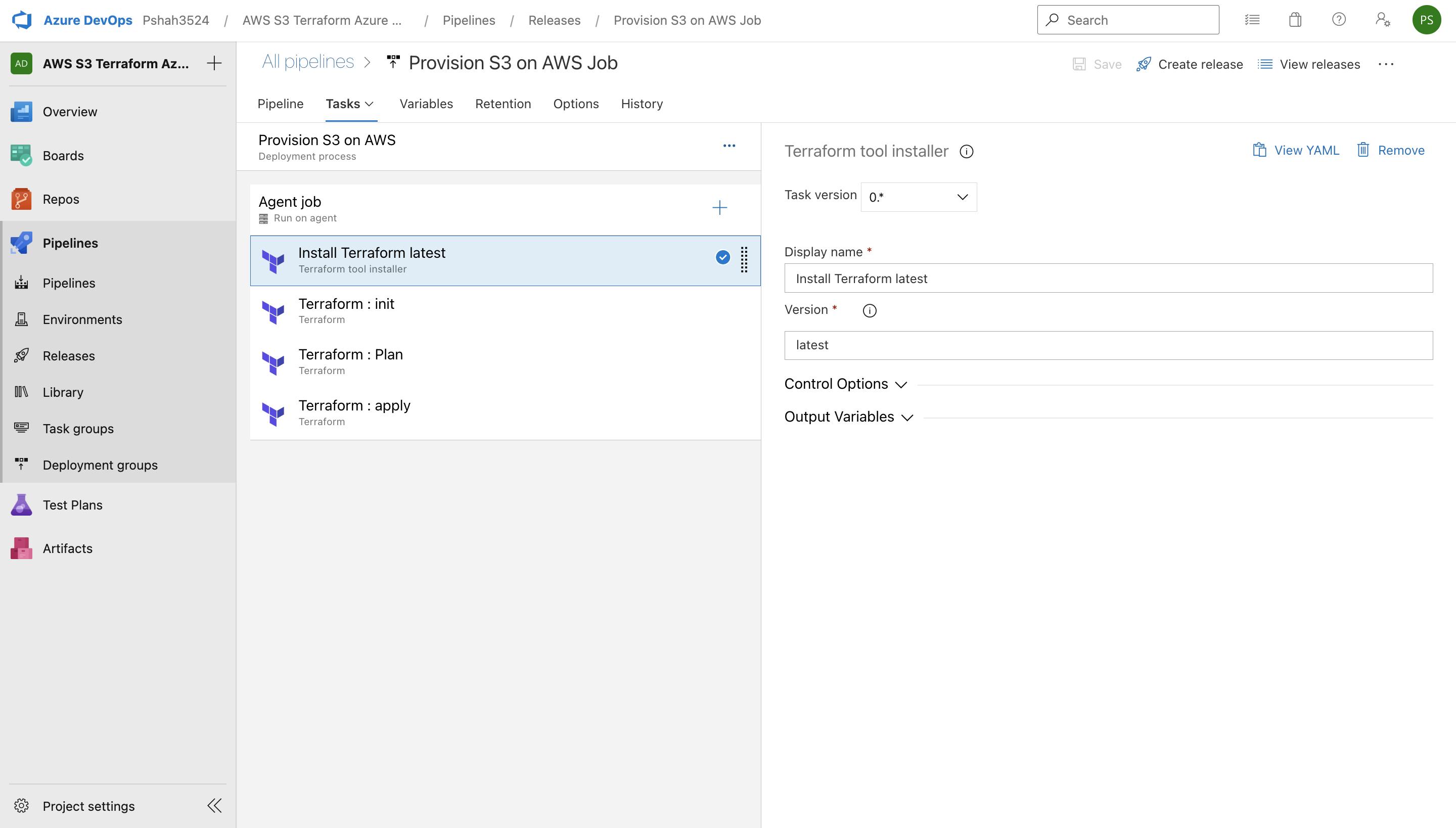

Install Terraform Task on Azure DevOps Pipeline

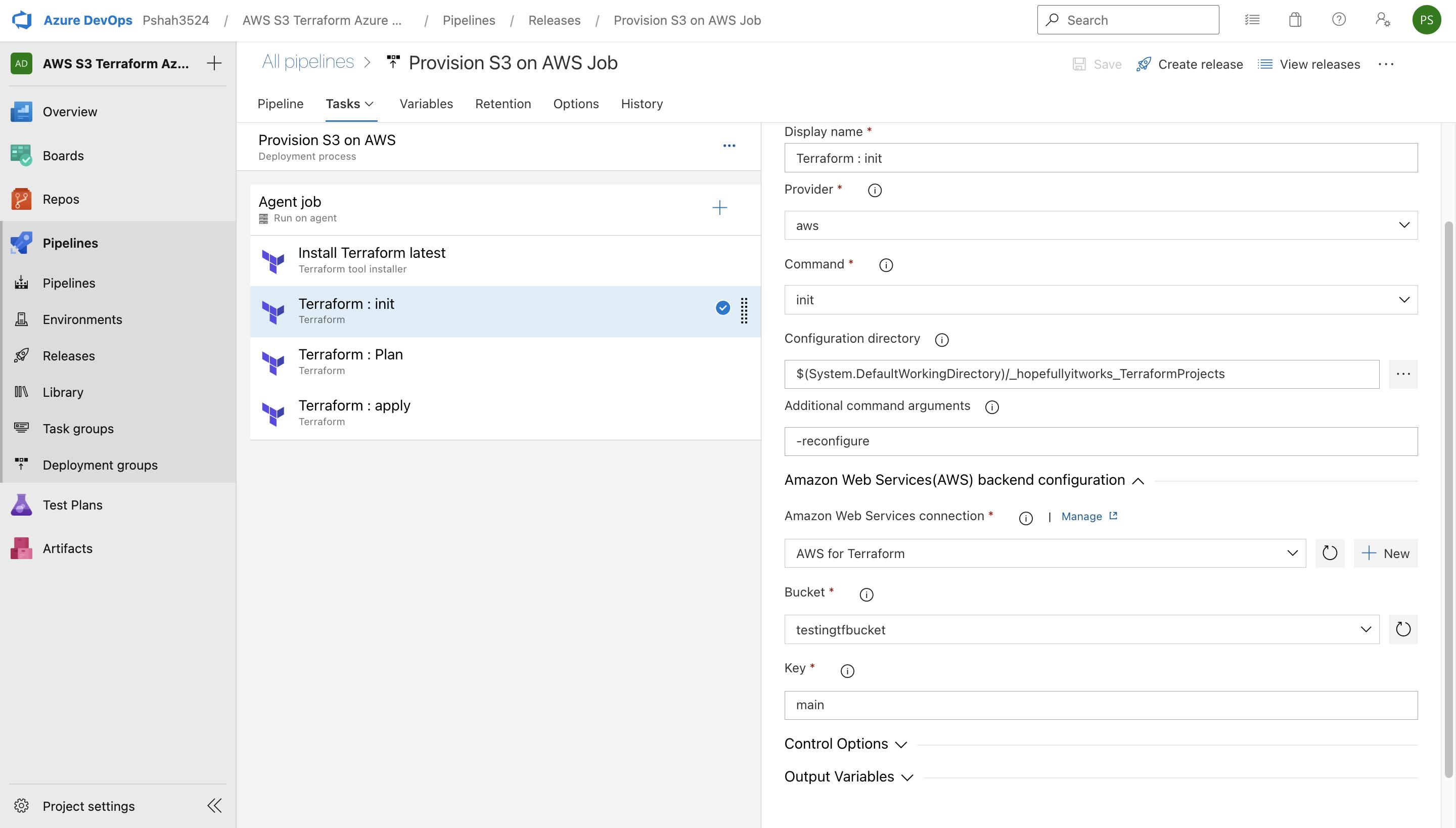

Terraform INIT stage on Azure DevOps Pipeline

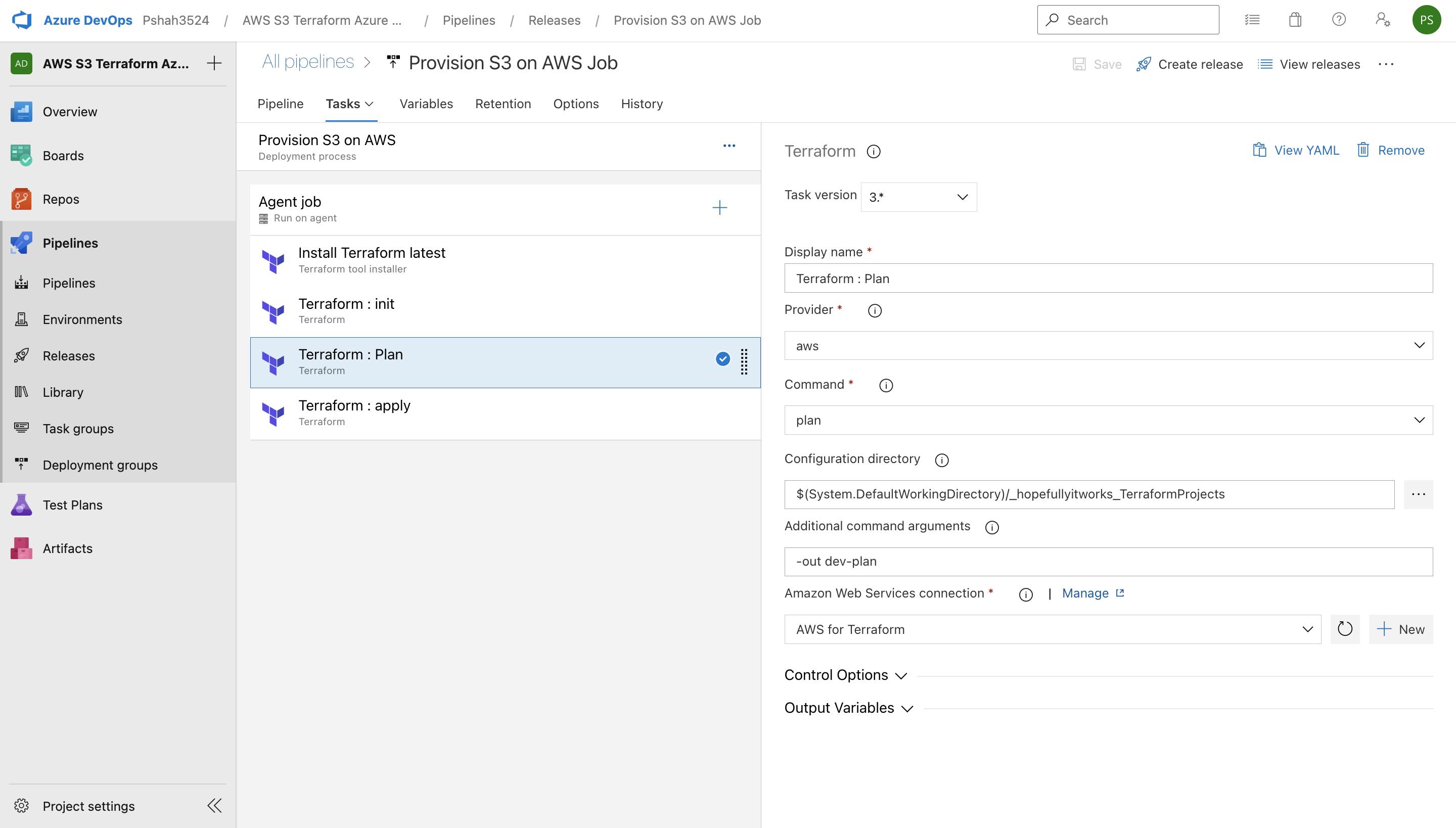

Terraform Plan stage on Azure DevOps Pipeline

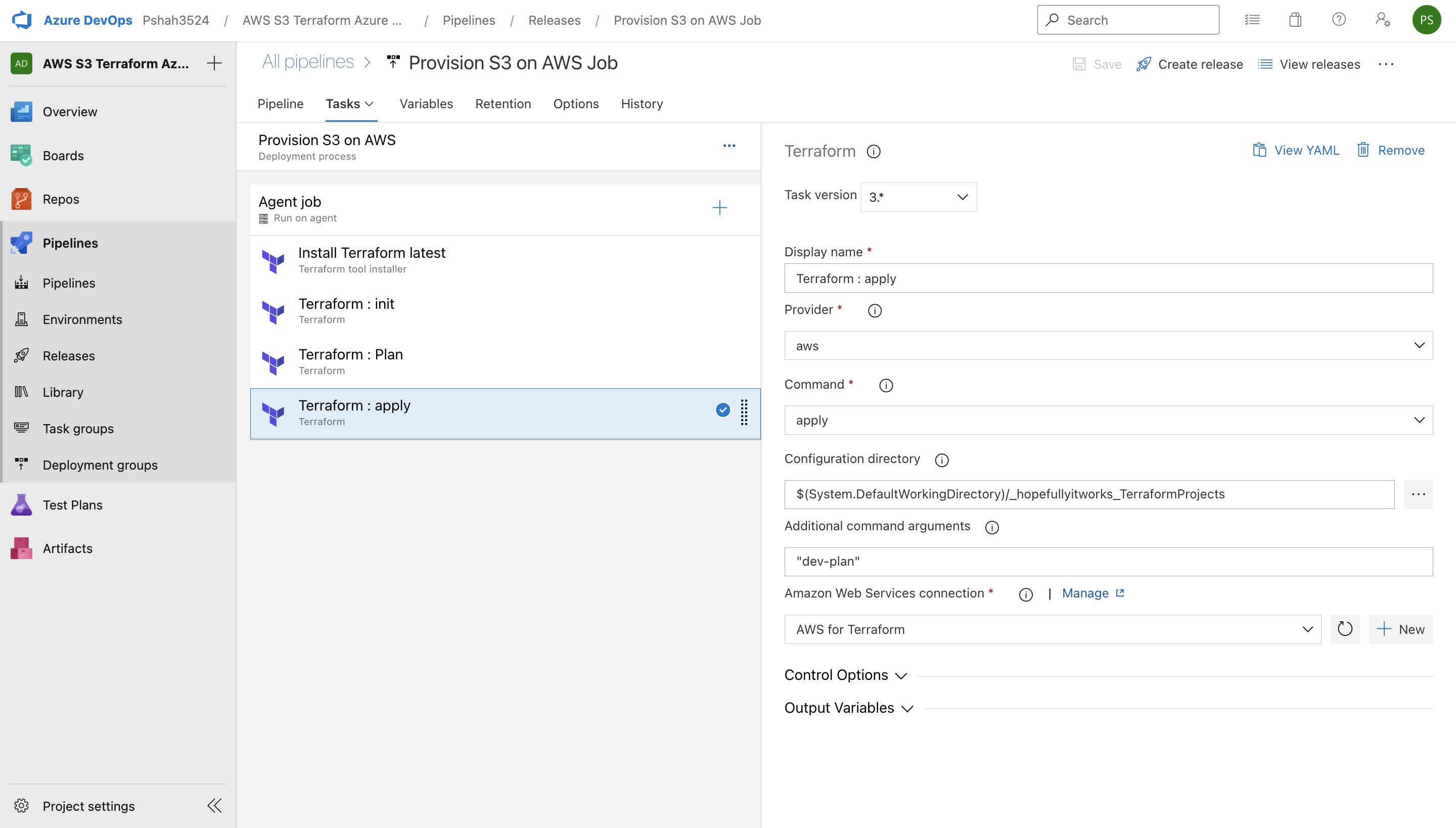

Terraform Apply stage on Azure DevOps Pipeline

After this release pipeline is launched, a Windows server is assigned to execute all the tasks: it initializes the job, downloads the artifacts, installs Terraform, runs terraform init, plan, and apply, and then finishes the job.
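Since Terraform is installed fresh on each run, it can help to pin the versions the configuration was written for. The sketch below is an assumption rather than part of the original project: the file name and the constraints are hypothetical, and the 3.x provider series is suggested only because s3.tf sets `acl` and `versioning` inline on `aws_s3_bucket` and the Elastic IPs use `vpc = true`, arguments that later major versions of the AWS provider deprecate.

```hcl
# versions.tf (hypothetical file; the version constraints are assumptions)
terraform {
  required_version = ">= 1.0"

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 3.0"
    }
  }
}
```

Terraform merges this block with the `terraform` block in backend.tf, so the two files can coexist.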

Once the pipeline has finished, the resources will be visible in your AWS Console.
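To confirm what was created without opening the console, the configuration could also expose a few outputs, which Terraform prints at the end of the apply step. A minimal sketch, assuming a hypothetical outputs.tf; the output names are illustrative:

```hcl
# outputs.tf (hypothetical file; output names are illustrative)
output "instance_public_ip" {
  description = "Elastic IP attached to the instance defined in main.tf"
  value       = aws_eip.myFirstInstance.public_ip
}

output "sonar_public_ip" {
  description = "Elastic IP attached to the instance defined in sonar.tf"
  value       = aws_eip.mySonarInstance.public_ip
}

output "bucket_name" {
  description = "Generated name of the bucket created from bucket_prefix"
  value       = aws_s3_bucket.my-s3-bucket.id
}
```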